Prompt Engineering Techniques

Anonymous • December 24, 2023

Fundamentals of Prompt Engineering Techniques

Prompt engineering is a critical discipline within artificial intelligence (AI), particularly in Natural Language Processing (NLP). It involves the strategic crafting of text prompts that guide large language models (LLMs), which are typically transformer-based, to produce desired outputs. This section covers the foundations of the practice: zero-shot and few-shot prompting, chain-of-thought prompting, and self-consistency.

1.1 Understanding Zero-Shot & Few-Shot Prompting

Zero-shot and few-shot prompting are methods for eliciting accurate responses from LLMs without additional training on task-specific datasets. In zero-shot prompting, the prompt describes the task but contains no worked examples, so the model must rely on the knowledge it acquired during training. In few-shot prompting, the prompt also includes a small number of input/output examples. The model uses these examples to infer the desired output format and content.
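The difference between the two styles is easiest to see in the prompt text itself. The sketch below builds both variants for a sentiment task. The function names, the "Input:/Output:" layout, and the sample reviews are illustrative assumptions, not a required format.

```python
def zero_shot_prompt(task: str, query: str) -> str:
    """Build a prompt that states the task and the input, with no examples."""
    return f"{task}\n\nInput: {query}\nOutput:"


def few_shot_prompt(task: str, examples: list[tuple[str, str]], query: str) -> str:
    """Build a prompt that shows worked input/output pairs before the real input."""
    shots = "\n\n".join(f"Input: {x}\nOutput: {y}" for x, y in examples)
    return f"{task}\n\n{shots}\n\nInput: {query}\nOutput:"


# Hypothetical task and examples, chosen only for illustration.
task = "Classify the sentiment of the review as positive or negative."
examples = [
    ("The battery lasts all day.", "positive"),
    ("It stopped working after a week.", "negative"),
]

print(zero_shot_prompt(task, "Setup was quick and painless."))
print("---")
print(few_shot_prompt(task, examples, "Setup was quick and painless."))
```

Both prompts end with an open "Output:" slot, so the only variable being changed is whether the model sees examples first.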

Both zero-shot and few-shot prompting are instrumental in evaluating the flexibility and adaptability of LLMs. They serve as benchmarks for the model's capacity to generalize from its training and apply learned concepts to novel scenarios. The choice between zero-shot and few-shot prompting hinges on the complexity of the task and the specificity of the desired output.

1.2 The Role of Chain of Thought in Prompt Design

Chain of thought (CoT) prompting is a technique that asks the model to write out intermediate reasoning steps before giving its final answer. Working through a sequence of logical steps helps LLMs tackle complex problems that require multi-step reasoning. The approach makes the intermediate steps visible for inspection and often improves the accuracy and coherence of the responses.

In prompt design, the chain of thought is usually introduced in one of two ways: by including worked examples whose answers spell out the reasoning, or by adding an instruction such as "Let's think step by step." The effectiveness of CoT prompting depends on the clarity and logical progression of these examples or instructions. When executed well, CoT prompting can significantly improve the model's performance on tasks such as arithmetic reasoning, causal inference, and problem-solving.
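As a rough illustration of the idea, the sketch below assembles a CoT prompt from worked examples that include their reasoning, and ends with a cue for the model to reason before answering. The function name, the "Q:/A:" layout, and the apple example are assumptions made for this sketch.

```python
def cot_prompt(question: str, worked_examples: list[tuple[str, str, str]]) -> str:
    """Build a chain-of-thought prompt.

    Each worked example is a (question, reasoning, answer) triple. The
    reasoning is shown before the answer so the model imitates that order.
    """
    parts = [
        f"Q: {q}\nA: {reasoning} The answer is {answer}."
        for q, reasoning, answer in worked_examples
    ]
    # The final question ends with a cue that invites step-by-step reasoning.
    parts.append(f"Q: {question}\nA: Let's think step by step.")
    return "\n\n".join(parts)


# Hypothetical worked example; the arithmetic is 23 - 20 = 3, then 3 + 6 = 9.
examples = [
    (
        "A shop has 23 apples. It uses 20 and buys 6 more. How many apples are left?",
        "The shop starts with 23 apples. After using 20, 23 - 20 = 3 remain. "
        "Buying 6 more gives 3 + 6 = 9.",
        "9",
    )
]

print(cot_prompt("A library has 120 books, lends 45, and receives 30. How many now?", examples))
```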

1.3 Leveraging Self-Consistency for Improved Responses

Self-consistency is a technique that builds on chain-of-thought prompting. Instead of relying on a single generated response, the same prompt is sampled several times, typically with a nonzero sampling temperature, so that the model produces several independent chains of reasoning. The final answers are then compared, and the answer that appears most often is selected.

The underlying intuition is that a complex problem often admits several valid lines of reasoning that converge on the same correct answer, whereas flawed reasoning tends to scatter across different wrong answers. Agreement across samples therefore serves as a rough signal of reliability, at the cost of additional model calls. The technique applies most directly to tasks with a short, checkable final answer. It is a valuable tool in applications where precision and reliability are paramount, such as legal document analysis, medical diagnosis, and financial forecasting.
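The voting procedure commonly associated with self-consistency, sampling several completions for one prompt and keeping the most frequent final answer, can be sketched as follows. The `sample` callable stands in for a real model call and is an assumption, as is the convention that completions end with "The answer is X."

```python
import itertools
import re
from collections import Counter
from typing import Callable, Optional


def extract_answer(completion: str) -> Optional[str]:
    """Pull the final answer out of a completion ending in 'The answer is X.'"""
    match = re.search(r"The answer is\s+(.+?)\.?\s*$", completion.strip())
    return match.group(1) if match else None


def self_consistent_answer(
    sample: Callable[[str], str], prompt: str, n_samples: int = 5
) -> Optional[str]:
    """Sample n completions and return the most common extracted answer."""
    answers = [extract_answer(sample(prompt)) for _ in range(n_samples)]
    votes = Counter(a for a in answers if a is not None)
    return votes.most_common(1)[0][0] if votes else None


# Stand-in for a model sampled at nonzero temperature (hypothetical outputs).
canned = itertools.cycle([
    "23 - 20 = 3, and 3 + 6 = 9. The answer is 9.",
    "23 - 20 = 2, and 2 + 6 = 8. The answer is 8.",
    "Using 20 leaves 3. Adding 6 gives 9. The answer is 9.",
    "I am not sure.",
    "3 + 6 = 9. The answer is 9.",
])


def fake_sample(prompt: str) -> str:
    return next(canned)


print(self_consistent_answer(fake_sample, "How many apples are left?"))
```

Completions with no recognizable final answer are simply left out of the vote, which is one of several reasonable design choices.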

By understanding and applying these fundamental prompt engineering techniques, practitioners can harness the full potential of LLMs, enabling them to perform a wide array of tasks with greater accuracy and efficiency.

Advanced Prompting Techniques

Within the context of large language models (LLMs), prompt engineering has emerged as a critical skill. This section covers advanced prompting techniques that extend beyond the foundational methods of zero-shot and few-shot prompting. These techniques refine the interaction with LLMs so that they can tackle more complex tasks with greater precision. They change what the model is given as input, not what the model has learned.

2.1 Iterative Prompting for Enhanced Learning

Iterative prompting is a technique that involves the gradual refinement of prompts through successive iterations. This method leverages the feedback loop between the user and the LLM to incrementally improve the model's responses. By analyzing the output of each prompt, the engineer can make subtle adjustments to the input, thereby guiding the model towards a more accurate understanding of the task at hand.

For example, consider a scenario where an LLM is tasked with generating code snippets. The initial prompt may yield functional but suboptimal code. Through iterative prompting, the engineer can refine the prompt by incorporating specific coding standards or best practices, prompting the model to revise its output accordingly.

Initial Prompt:

"Write a Python function to calculate the factorial of a number."

Refined Prompt:

"Write a Python function to calculate the factorial of a number, using recursion and adhering to PEP 8 style guidelines."

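This process enhances the quality of the model's responses. The model's weights do not change between prompts, so nothing is learned in the training sense. The improvement comes from the extra constraints stated in the refined prompt and, within a single chat session, from earlier turns that remain in the context.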
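As a reference point, a function that meets the refined prompt's two constraints (recursion and PEP 8 style) might look like the sketch below. It illustrates the target, not actual model output, and the check for negative input is an added assumption that the prompt does not ask for.

```python
def factorial(n: int) -> int:
    """Return n! for a non-negative integer n, computed recursively."""
    if n < 0:
        # Assumption: reject negative input rather than recurse forever.
        raise ValueError("factorial is not defined for negative numbers")
    if n in (0, 1):
        return 1
    return n * factorial(n - 1)


print(factorial(5))  # 120
```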

2.2 Sequential Prompting for Complex Tasks

Sequential prompting is a sophisticated technique that involves breaking down complex tasks into a series of simpler, sequential prompts. This method is particularly useful when dealing with multi-step problems or tasks that require a layered approach to reasoning.

By structuring the interaction as a sequence of prompts, each building upon the previous response, the LLM can navigate through the complexity of the task in a controlled and coherent manner. This approach mirrors human problem-solving strategies, where a larger problem is divided into manageable segments.

Consider the task of creating a comprehensive report on a technical subject. The sequential prompting might look like this:

First Prompt:

"List the key concepts involved in blockchain technology."

Second Prompt:

"Explain the principle of decentralization in blockchain."

Third Prompt:

"Describe the process of a transaction in a blockchain network."

Each prompt in the sequence is designed to elicit specific information that contributes to the overall understanding of the topic, culminating in a detailed and structured final output.
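A sequence like this can be driven by a small loop that carries earlier exchanges forward as context. The sketch below assumes a `model` callable that maps a prompt string to a response string. The "User:/Assistant:" transcript layout and the stub model are placeholders, not any particular vendor's API.

```python
from typing import Callable


def run_sequence(
    model: Callable[[str], str], prompts: list[str]
) -> list[tuple[str, str]]:
    """Send prompts in order, prepending earlier exchanges as context."""
    history: list[tuple[str, str]] = []
    for prompt in prompts:
        context = "".join(f"User: {p}\nAssistant: {r}\n\n" for p, r in history)
        response = model(f"{context}User: {prompt}\nAssistant:")
        history.append((prompt, response))
    return history


def stub_model(full_prompt: str) -> str:
    # Placeholder model: reports how many earlier turns it was shown.
    turns = full_prompt.count("Assistant:") - 1
    return f"(response generated with {turns} earlier turn(s) as context)"


steps = [
    "List the key concepts involved in blockchain technology.",
    "Explain the principle of decentralization in blockchain.",
    "Describe the process of a transaction in a blockchain network.",
]

for prompt, response in run_sequence(stub_model, steps):
    print(prompt)
    print("  ", response)
```

Passing the full history on every call is the simplest option. For long sequences, summarizing earlier turns may be needed to stay within the model's context limit.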

2.3 Meta-Prompting: Prompts that Teach Prompting

Meta-prompting is a technique in which the prompt is about prompts themselves: rather than asking the LLM for an answer to a task, it asks the model to critique, rewrite, or generate prompts. Used recursively, the model's suggested prompt can be tried, its output reviewed, and the prompt revised again based on the insights gained.

This technique is loosely analogous to meta-cognition in humans, where awareness of one's thought processes can lead to self-improvement. In the context of LLMs, meta-prompting may help reduce the amount of manual prompt design, although the suggested prompts still need to be tested like any others.

An example of meta-prompting might involve asking the LLM to evaluate the effectiveness of a given prompt and suggest modifications to enhance its clarity or specificity:

Meta-Prompt:

"Assess the clarity of the following prompt: 'Write an essay about the environment.' Suggest improvements to make the prompt more specific and goal-oriented."

Through meta-prompting, the LLM can be used to produce prompts that are more targeted and effective, thereby improving its interaction with users and its performance on complex tasks.
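A meta-prompt of this kind can be generated from a template so that the same review criteria are applied to every candidate prompt. The sketch below is one possible template. The function name and the list of criteria are assumptions for illustration.

```python
def meta_prompt(candidate: str, criteria: list[str]) -> str:
    """Wrap a candidate prompt in a request to critique and rewrite it."""
    checklist = "\n".join(f"- {c}" for c in criteria)
    return (
        "Assess the following prompt against these criteria:\n"
        f"{checklist}\n\n"
        f"Prompt: '{candidate}'\n\n"
        "Then suggest a revised prompt that is more specific and goal-oriented."
    )


print(meta_prompt(
    "Write an essay about the environment.",
    ["clarity", "specificity", "stated audience", "stated length"],
))
```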

Prompt Engineering in Practice

The discipline of prompt engineering is pivotal in the realm of artificial intelligence, particularly within the context of natural language processing (NLP). It is a nuanced field that requires a deep understanding of language models and their interaction with various prompts. This section delves into practical applications of prompt engineering, showcasing its significance through case studies and domain-specific applications.

3.1 Case Studies: Effective Prompt Engineering Examples

Prompt engineering has been instrumental in enhancing the performance of language models across a multitude of tasks. By examining real-world case studies, we can glean insights into the methodologies and techniques that have led to successful outcomes.

One notable example is the utilization of prompts in customer service chatbots. By engineering prompts that anticipate common queries and guide the conversation, businesses have been able to provide swift and accurate responses to customer inquiries. This not only improves customer satisfaction but also streamlines the support process, reducing the workload on human agents.

Another case study involves the use of prompts in content generation. Media companies have leveraged prompt engineering to create article outlines, headlines, and even full drafts. The prompts are designed to elicit creative and coherent text from language models, which can then be revised by human editors. This collaborative approach between AI and humans has led to increased efficiency in content production.

In the realm of education, prompt engineering has been applied to create personalized learning experiences. By crafting prompts that adapt to a student's learning style and knowledge level, educational platforms can provide tailored tutoring sessions. This adaptive prompting helps students grasp complex concepts more effectively and fosters a more engaging learning environment.

These case studies underscore the transformative impact of prompt engineering in various sectors, highlighting its role in optimizing the interaction between humans and AI.

3.2 Prompt Engineering for Domain-Specific Applications

The versatility of prompt engineering extends to domain-specific applications, where the intricacies of specialized knowledge are paramount. In these contexts, prompts are meticulously crafted to incorporate industry-specific terminology and concepts, ensuring that the language model's responses are both accurate and relevant.

In the healthcare sector, for example, prompt engineering is used to assist medical professionals in diagnosing conditions and suggesting treatments. Prompts are designed so that the model handles medical terminology and patient data correctly, providing support in decision-making processes.

Legal professionals also benefit from prompt engineering. By inputting prompts that contain legal precedents and statutes, language models can assist in drafting legal documents and providing preliminary advice. This application requires a high degree of precision in the prompts to ensure that the generated content adheres to legal standards.

In the technical domain, engineers and developers use prompt engineering to generate code snippets, troubleshoot issues, and document software. The prompts must be highly specific and structured to yield accurate and functional code, reflecting the technical expertise required in this field.

These domain-specific applications of prompt engineering demonstrate its capacity to enhance the capabilities of language models, making them invaluable tools for professionals across various industries.

Optimizing Prompts for AI Performance

In the realm of artificial intelligence, particularly within the scope of natural language processing (NLP), the efficacy of AI models is significantly influenced by the quality of prompts used to interact with them. This section delves into the methodologies and strategies for optimizing prompts, ensuring that AI models not only comprehend the tasks at hand but also generate responses with heightened accuracy and relevance.

4.1 Fine-Tuning Prompts for Accuracy and Relevance

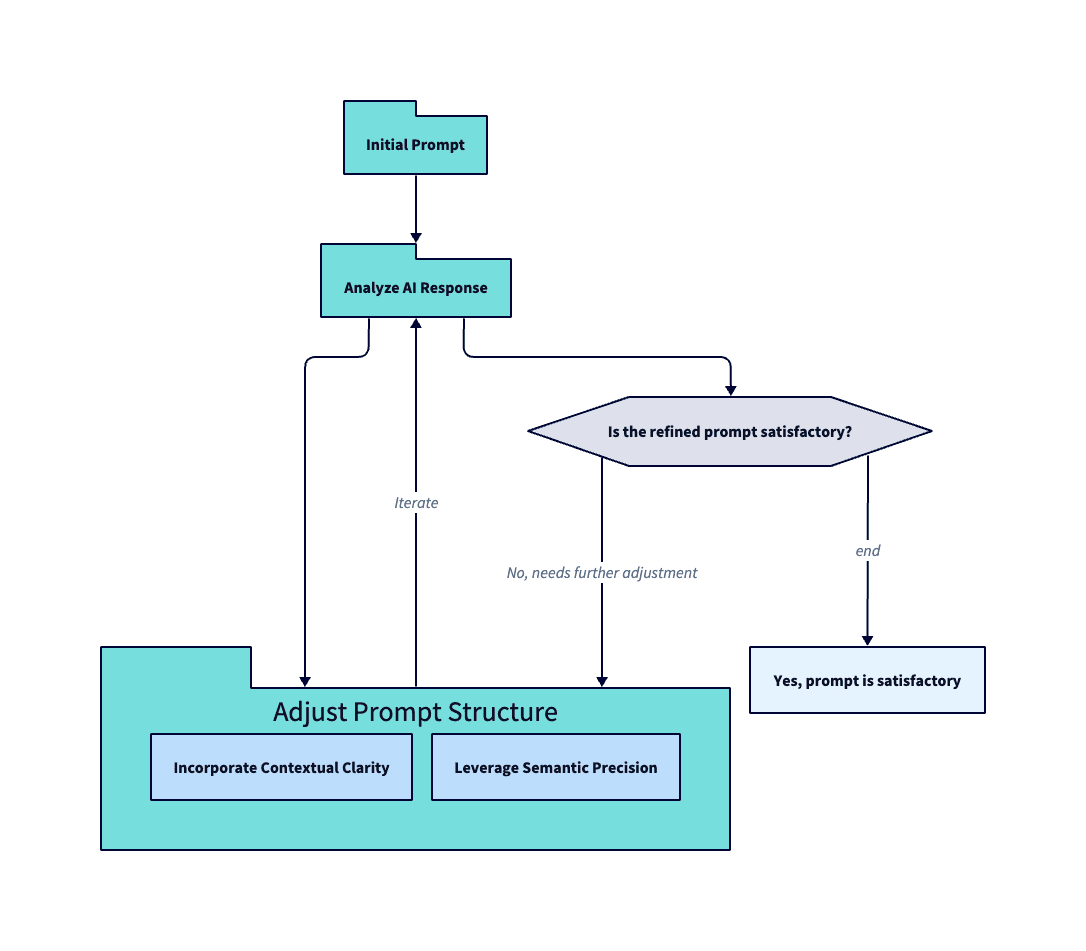

Fine-tuning a prompt, in the sense used here, means adjusting the input text, not the model's weights. It is a meticulous process of modifying the input to elicit the most accurate and relevant outputs from AI models. This subsection explores three aspects of that process: prompt structure, contextual clarity, and semantic precision.

Understanding the Impact of Prompt Structure

The structure of a prompt can dramatically affect the performance of an AI model. A well-structured prompt clearly conveys the task, context, and expected format of the response. For instance, when querying an AI for a summary, the prompt should explicitly state the length and style of the summary desired. This precision in prompt structure guides the AI to generate outputs that align closely with user expectations.

Incorporating Contextual Clarity

Contextual clarity within prompts is essential for achieving high levels of accuracy in AI responses. By providing detailed background information and setting the scene for the AI model, prompts can be tailored to produce more nuanced and contextually appropriate outputs. For example, when asking an AI to generate content on a technical subject, including specific details such as the target audience and technical depth can significantly enhance the relevance of the generated content.

Leveraging Semantic Precision

Semantic precision in prompts refers to the careful selection of words and phrases that precisely define the task for the AI model. This involves avoiding ambiguity and ensuring that the language used in the prompt unambiguously directs the AI towards the desired outcome. For instance, instead of asking an AI to "write about solar energy," a semantically precise prompt would be "explain the process of photovoltaic energy conversion in solar panels for an undergraduate physics textbook."
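The three aspects above, structure, context, and precise wording, can be made explicit by assembling prompts from named fields. The sketch below is one way to do that. The `PromptSpec` class, its field names, and the 300-word target are assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class PromptSpec:
    """Named parts of a prompt; empty parts are left out when rendering."""

    task: str
    context: str = ""
    audience: str = ""
    output_format: str = ""

    def render(self) -> str:
        sections = [
            ("Task", self.task),
            ("Context", self.context),
            ("Audience", self.audience),
            ("Output format", self.output_format),
        ]
        return "\n".join(f"{label}: {text}" for label, text in sections if text)


vague = PromptSpec(task="Write about solar energy.")
precise = PromptSpec(
    task="Explain the process of photovoltaic energy conversion in solar panels.",
    audience="Readers of an undergraduate physics textbook",
    output_format="One textbook section of about 300 words",  # assumed length
)

print(vague.render())
print("---")
print(precise.render())
```

Keeping the parts separate makes it obvious which of them a given prompt is missing.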

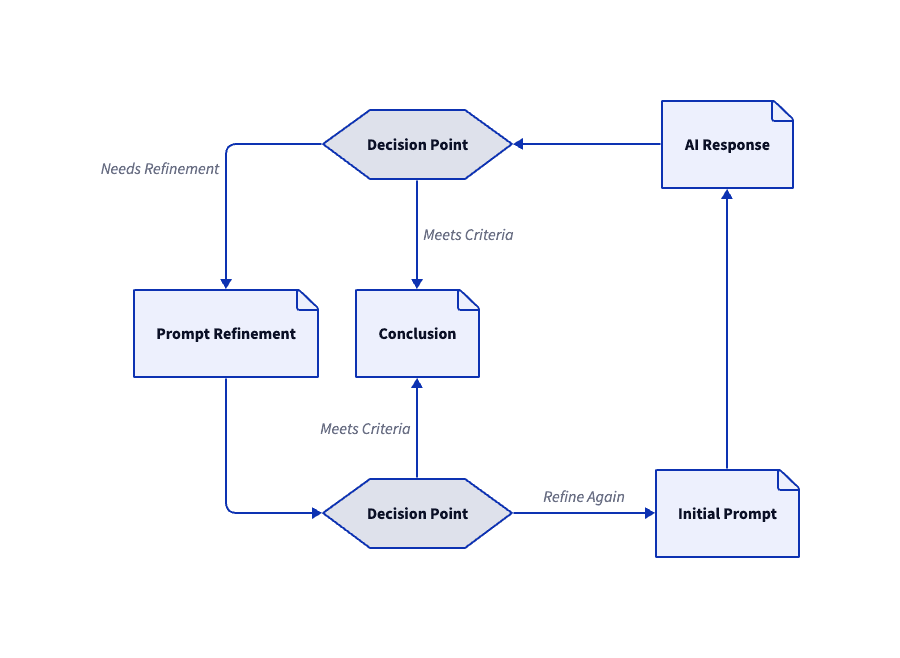

4.2 Evaluating and Iterating on Prompt Effectiveness

The evaluation and iteration of prompts are critical steps in the prompt engineering process. This subsection outlines the methodologies for assessing prompt effectiveness and the iterative techniques used to refine prompts based on performance feedback.

Analyzing AI Response Quality

To evaluate the effectiveness of a prompt, one must analyze the quality of the AI's response. This analysis includes assessing the accuracy, relevance, and coherence of the information provided. Automated metrics, such as agreement with a reference answer or adherence to a requested length, and qualitative review by a human are used together to determine whether the response meets the standards set forth by the prompt. If discrepancies are found, the prompt must be adjusted accordingly.
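Simple automated checks can cover part of this analysis. The sketch below scores a response on two crude proxies: whether required terms appear and whether a word limit is respected. Both proxies and the function name are assumptions, and neither measures factual accuracy or coherence, which still need reference answers or human review.

```python
def score_response(response: str, required_terms: list[str], max_words: int) -> dict:
    """Score a response on term coverage and length (crude relevance proxies)."""
    lowered = response.lower()
    found = [t for t in required_terms if t.lower() in lowered]
    return {
        "coverage": len(found) / len(required_terms) if required_terms else 1.0,
        "within_length": len(response.split()) <= max_words,
        "missing_terms": [t for t in required_terms if t not in found],
    }


# Hypothetical response to a request for a short summary of photovoltaics.
response = "Solar cells absorb photons, which free electrons and create a current."
print(score_response(response, ["photon", "electron", "semiconductor"], max_words=20))
```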

Iterative Refinement of Prompts

Iterative refinement is the process of continuously adjusting prompts based on the AI's performance. This involves a cycle of testing, analyzing, and tweaking prompts to enhance the quality of AI responses. For example, if an AI model consistently misinterprets a particular type of prompt, adjustments are made to the wording or structure of the prompt to correct this issue.
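The test, analyze, and tweak cycle can be written as a loop that records each round. In the sketch below, `model`, `check`, and `revise` are caller-supplied callables, and all three, along with the stub implementations, are assumptions. In practice the revise step is often a human editing the prompt.

```python
from typing import Callable


def refine(
    model: Callable[[str], str],
    prompt: str,
    check: Callable[[str], list[str]],
    revise: Callable[[str, list[str]], str],
    max_rounds: int = 3,
) -> list[tuple[str, str, list[str]]]:
    """Run up to max_rounds of test/analyze/tweak.

    Returns one (prompt, response, problems) record per round, stopping
    early when check() reports no problems.
    """
    history = []
    for _ in range(max_rounds):
        response = model(prompt)
        problems = check(response)
        history.append((prompt, response, problems))
        if not problems:
            break
        prompt = revise(prompt, problems)
    return history


# Stubs for demonstration only.
def stub_model(prompt: str) -> str:
    if "docstring" in prompt:
        return 'def f(n):\n    """Return n!."""\n    ...'
    return "def f(n):\n    ..."


def check_docstring(response: str) -> list[str]:
    return [] if '"""' in response else ["missing docstring"]


def add_requirements(prompt: str, problems: list[str]) -> str:
    return prompt + " Include a docstring."


for used_prompt, _, problems in refine(
    stub_model, "Write a factorial function.", check_docstring, add_requirements
):
    print(repr(used_prompt), "->", problems or "ok")
```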

Utilizing A/B Testing

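A/B testing is a method used to compare the effectiveness of different prompts. Two variations of a prompt are run over the same set of representative inputs, and the resulting outputs are scored with the same criteria. Because model outputs can vary from run to run, a comparison based on a single output per prompt is unreliable, so the scores are aggregated across many inputs before deciding which prompt performs better. This empirical approach allows for data-driven decisions in the optimization of prompts.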
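A minimal harness for such a comparison is sketched below. It assumes a `model` callable, a `score` function that rates a response given the original input, and prompt templates that contain an `{input}` placeholder. All of these names, and the stubs used in the demonstration, are hypothetical.

```python
from statistics import mean
from typing import Callable


def ab_test(
    model: Callable[[str], str],
    score: Callable[[str, str], float],
    template_a: str,
    template_b: str,
    inputs: list[str],
) -> dict[str, float]:
    """Run both templates over the same inputs and return each mean score."""
    results = {}
    for name, template in (("A", template_a), ("B", template_b)):
        scores = [score(model(template.format(input=text)), text) for text in inputs]
        results[name] = mean(scores)
    return results


# Stubs for demonstration: the "model" obeys a word limit only when asked.
def stub_model(prompt: str) -> str:
    words = prompt.split()
    return " ".join(words[:10]) if "at most 10 words" in prompt else prompt


def length_score(response: str, original: str) -> float:
    return 1.0 if len(response.split()) <= 10 else 0.0


inputs = [
    "Solar panels convert sunlight into electricity using the photovoltaic "
    "effect in semiconductor cells.",
    "Blockchain networks record transactions in blocks that are linked "
    "together and shared across many nodes.",
]

print(ab_test(
    stub_model,
    length_score,
    "Summarize: {input}",
    "Summarize in at most 10 words: {input}",
    inputs,
))
```

With a real model, a larger input set and a simple significance check would give more confidence that a difference in means is not noise.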

In summary, optimizing prompts for AI performance is a systematic process that requires a deep understanding of prompt engineering techniques. By fine-tuning the structure, context, and semantics of prompts, and employing rigorous evaluation and iterative refinement, developers can significantly enhance the accuracy and relevance of AI-generated content.

The Future of Prompt Engineering

The domain of prompt engineering is rapidly evolving, with new methodologies and applications emerging at the intersection of machine learning and natural language processing. This section explores the future trajectory of prompt engineering, focusing on the latest trends and the ethical considerations that accompany the advancement of this field.

5.1 Emerging Trends in Prompt Engineering

Prompt engineering is a dynamic field that is continuously influenced by the latest advancements in artificial intelligence (AI) and machine learning (ML). As we look to the future, several trends are poised to shape the development and application of prompt engineering techniques.

One significant trend is the move towards more sophisticated prompting methods that enable deeper and more nuanced interactions with large language models (LLMs). These methods include iterative and recursive prompting, which involve the use of prompts that build upon previous responses to guide the AI towards a more refined output. This approach can be particularly effective for complex tasks that require a series of logical steps or for generating content that builds on a specific theme or narrative.

Another trend is the integration of multimodal inputs, where prompts are not limited to text but can include images, audio, and other data types. This expansion allows LLMs to process and generate content that reflects a richer understanding of the world, akin to human perception. For instance, an AI could be prompted with a photograph and a text description to generate a story that combines visual and textual elements.

The use of meta-prompts is also gaining traction. These are prompts that instruct the AI on how to generate or improve prompts, so that part of the prompt design work is delegated to the model itself. This self-referential approach can lead to more efficient use of AI capabilities and reduce the need for human intervention in prompt design.

Furthermore, the application of prompt engineering is expanding beyond general-purpose tasks. In fields such as healthcare, finance, and legal services, prompt engineering is being used to create domain-specific applications that require a deep understanding of industry jargon and concepts. This specialization is leading to more accurate and relevant AI-generated content, which can assist professionals in decision-making processes.

Lastly, the ethical dimension of prompt engineering is becoming a focal point. As AI systems become more integrated into societal functions, the responsibility to design prompts that are fair, unbiased, and respectful of privacy increases. This aspect of prompt engineering will likely receive more attention as the technology matures and its implications become more apparent.

5.2 Ethical Considerations in Prompt Design

The ethical considerations in prompt design are multifaceted and critical to the responsible deployment of AI technologies. As prompt engineers, it is imperative to ensure that the prompts we create do not perpetuate biases, infringe on privacy, or lead to the dissemination of misinformation.

One of the primary ethical concerns is the potential for AI to generate biased responses based on the data it has been trained on. To mitigate this risk, prompt engineers must be vigilant in crafting prompts that are neutral and designed to elicit unbiased outputs. Where the engineer has influence over model training, this may involve the use of balanced datasets. In all cases it involves checks to detect and correct for biases in the AI's responses.

Privacy is another key consideration. Prompts must be constructed in a way that respects the confidentiality of personal data and complies with data protection regulations. This is especially pertinent when AI is used in applications that handle sensitive information, such as medical records or financial transactions.

Moreover, the accuracy and truthfulness of AI-generated content are of utmost importance. Prompt engineers have a responsibility to design prompts that encourage factually correct outputs and discourage the generation of false or misleading information. This is particularly relevant in the context of news generation, academic research, and other areas where the integrity of information is paramount.

Finally, the potential for AI to generate harmful content, whether intentionally or inadvertently, must be addressed. Prompts should be designed with safety mechanisms that prevent the generation of content that could be offensive, discriminatory, or incite violence. This involves not only careful prompt design but also ongoing monitoring and refinement of AI outputs.

In conclusion, the future of prompt engineering is one of both exciting possibilities and significant responsibilities. As the field advances, prompt engineers must remain committed to the ethical principles that ensure AI technologies are used for the benefit of society as a whole.